Xingzhao Liu

AirSpatialBot: A Spatially-Aware Aerial Agent for Fine-Grained Vehicle Attribute Recognization and Retrieval

Jan 04, 2026

Abstract:Despite notable advancements in remote sensing vision-language models (VLMs), existing models often struggle with spatial understanding, limiting their effectiveness in real-world applications. To push the boundaries of VLMs in remote sensing, we specifically address vehicle imagery captured by drones and introduce a spatially-aware dataset, AirSpatial, which comprises over 206K instructions and introduces two novel tasks: Spatial Grounding and Spatial Question Answering. It is also the first remote sensing grounding dataset to provide 3DBB. To effectively transfer the existing image understanding of VLMs to spatial domains, we adopt a two-stage training strategy comprising Image Understanding Pre-training and Spatial Understanding Fine-tuning. Utilizing this trained spatially-aware VLM, we develop an aerial agent, AirSpatialBot, which is capable of fine-grained vehicle attribute recognition and retrieval. By dynamically integrating task planning, image understanding, spatial understanding, and task execution capabilities, AirSpatialBot adapts to diverse query requirements. Experimental results validate the effectiveness of our approach, revealing the spatial limitations of existing VLMs while providing valuable insights. The model, code, and datasets will be released at https://github.com/VisionXLab/AirSpatialBot

Wideband Channel Estimation for mmWave MIMO Systems with Beam Squint

Sep 06, 2022

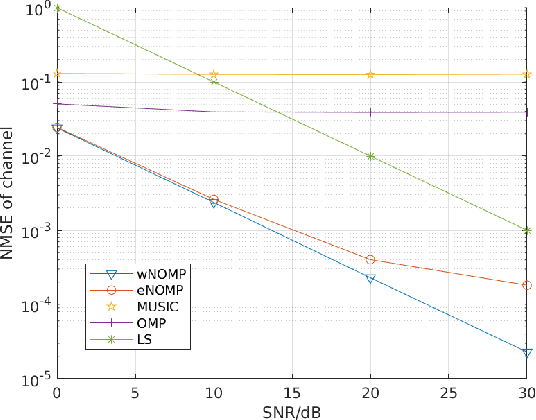

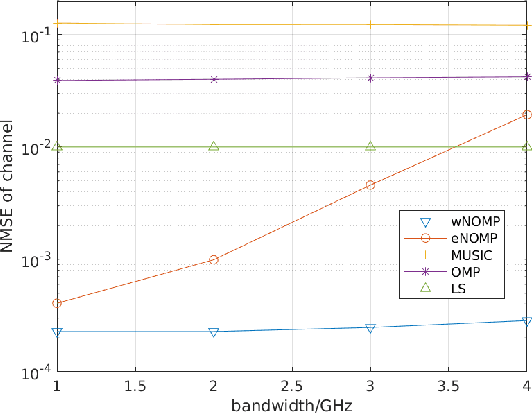

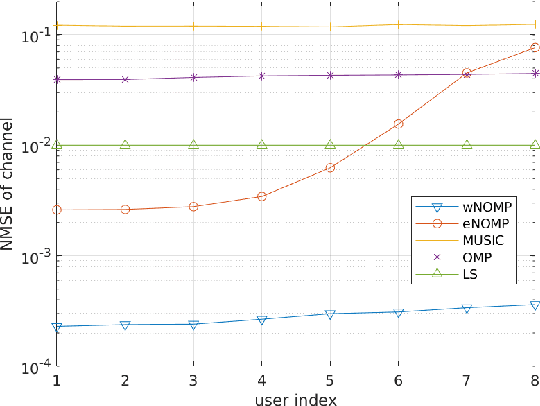

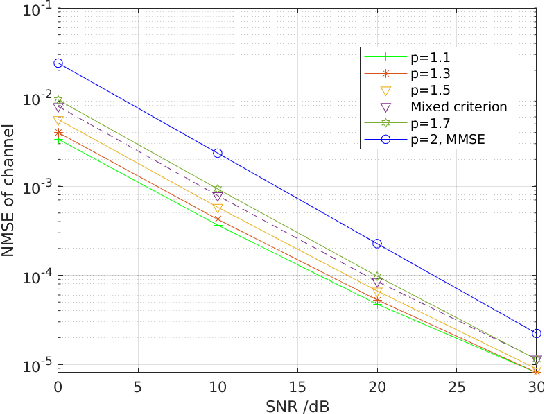

Abstract:As antenna arrays and bandwidths grow, many existing narrowband channel estimation methods that ignore the effect of beam squint may suffer severe performance degradation in wideband millimeter-wave (mmWave) communication systems. In this letter, a wideband Newtonized orthogonal matching pursuit (wNOMP) algorithm is proposed to perform channel estimation. The proposed method, based on the minimum mean square error (MMSE) criterion, is optimal for Gaussian noise. In real communication systems, however, the noise often follows a non-Gaussian distribution. Accordingly, we extend the wideband channel estimation method via the minimum $\ell_p$-norm criterion, which enhances robustness against non-Gaussian noise. Simulations validate the superiority of the proposed method over other representative methods.

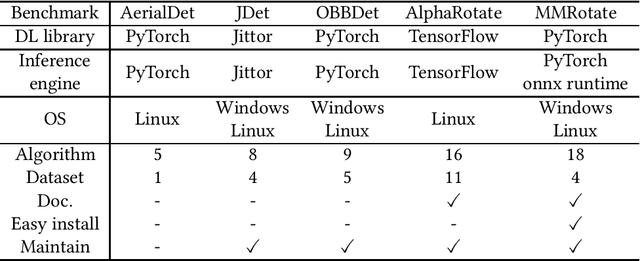

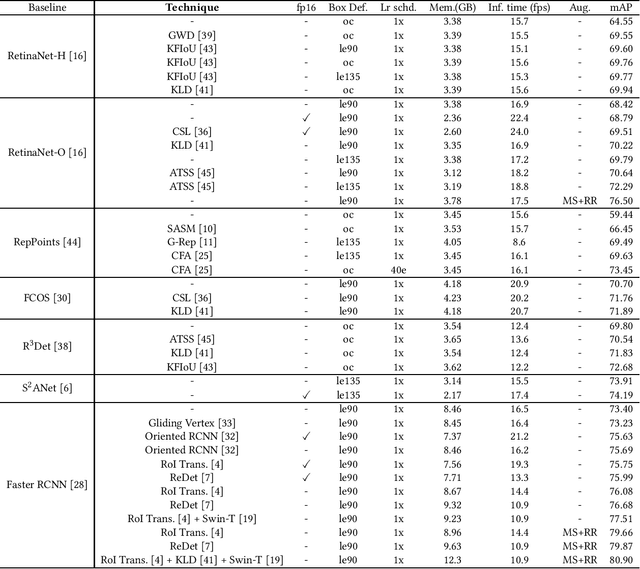

MMRotate: A Rotated Object Detection Benchmark using PyTorch

Apr 28, 2022

Abstract:We present an open-source toolbox, named MMRotate, which provides a coherent framework for training, inference, and evaluation of popular deep learning-based rotated object detection algorithms. MMRotate implements 18 state-of-the-art algorithms and supports the three most frequently used angle definition methods. To facilitate future research and industrial applications of rotated object detection-related problems, we also provide a large number of trained models and detailed benchmarks to give insights into the performance of rotated object detection. MMRotate is publicly released at https://github.com/open-mmlab/mmrotate.
